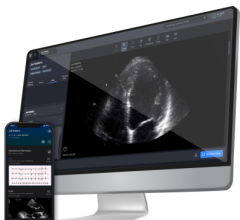

The artificial intelligence-driven Caption Guidance software guides point-of-care ultrasound (POCUS) users to get optimal cardiac ultrasound images. The AI software is an example of FDA-cleared software that is helping improve imaging, even when used by less experienced users.

The number of Food and Drug Administration (FDA)-approved AI-based algorithms is significant and has grown at a steady pace in recent years. The Medical Futurist’s infographic provides a handy, if slightly dated, overview of the field. These algorithms are largely aimed at helping medical professionals execute existing workflows more efficiently in a variety of fields, the two most popular being cardiology and radiology. As to the latter, the American College of Radiology (ACR) maintains a list of FDA-cleared AI algorithms in order to keep up with the growth in the field. This article provides a high-level overview of recent happenings in the world of AI and attempts to identify some of the associated liabilities.

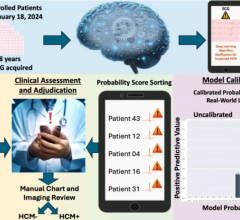

Example of an FDA-cleared Autonomous AI Diagnostic Software

This ever-growing list of authorized algorithms includes a particularly notable entry in IDx’s IDx-DR retinal diagnostic software, which is indicated for use with the Topcon TRC-NW400 retinal camera. On its own, the TRC-NW400 is designed to provide images of the retina and anterior segment of the human eye. The IDx-DR software, when integrated into the camera, is designed to detect more than mild diabetic retinopathy in patients diagnosed with diabetes mellitus. It does so by transmitting specific patient images selected by the user to IDx’s servers. Once received, the images are input into AI software, and the diagnostic results are then returned to the user and communicated to the patient. The paradigm-shifting nature of this system cannot be overstated, as the anticipated user will not need prior ocular imaging experience save for a modest training program. Despite some initial resistance from the ophthalmological community, the American Diabetes Association now recognizes this system as an alternative to traditional screening approaches.

Recent Regulatory Updates Regarding Artificial Intelligence

Given this authorization and subsequent adoption by the medical community, it is apparent that autonomous AI can be successfully incorporated into existing medical devices. This trend is certain to continue, most immediately in the medical fields involving imaging. In February 2020, the FDA devoted a public workshop to the topic entitled: “Evolving Role of Artificial Intelligence in Radiological Imaging.”

The presentations at this workshop provided valuable insight into the emerging trends in the future use of AI and its incorporation into medical devices and practice. Based on these discussions, it does not appear that a wave of autonomous devices with incorporated AI systems similar to the IDx-DR is poised to take over the radiological imaging field. However, there are many systems being tested in the computed tomography (CT) space with the potential to generate diagnoses with such a level of confidence that no human needs to view the underlying images.

The recently FDA-approved Caption Guidance AI system is more representative of the AI applications coming in the near future. Authorized in February 2020, just days before the workshop mentioned above, the Caption Guidance software is designed to function as an accessory to the uSmart 3200t Plus ultrasound system. This software provides real-time guidance to users so that they can capture diagnostic-level echocardiographic views and orientations. In one of the studies underlying the system’s approval, eight registered nurses were able to obtain imaging assessing left and right ventricular size, left ventricular function and non-trivial pericardial effusion, all with greater than 92% sufficiency. The gains in efficiency and access to care that similar systems could bring to the medical field are boundless.

Risk Profile for Integrating AI into Medical Devices

As seen with the IDx-DR retinal diagnostic software, integrating AI-based algorithms into medical devices has the potential to be extremely beneficial to physicians and patients. Those expected benefits include, among other things:

• Increased efficiency in treating and / or diagnosing patients by giving physicians the ability to focus on diagnoses and procedures that require greater skill and judgment;

• Increased diagnostic accuracy;

• Earlier detection of disease (particularly as access to care is improved);

• Improved treatment regimens; and,

• Lower healthcare costs.

However, there are also several risks associated with integrating AI into medical devices used to diagnose and treat patients. Some of the major risks include:

• Potential liability for harm to patients;

• The unauthorized use of private health information and HIPAA violations; and,

• The potential for reduced physician and patient medical decision making.

Potential Liability for Harm to Patients From AI

Given that AI has only recently been integrated into certain medical devices, the courts have yet to grapple with liability involving medical AI. However, the existing legal framework around medical malpractice suggests that the safest way for physicians to use medical AI is as a confirmatory tool to support a decision to follow the standard of care. As AI evolves, though, it has the potential to perform better than even the most skilled and knowledgeable physicians in certain fields. When that happens, certain AI-integrated medical devices will likely become the standard of care and the law will require that physicians and other health care providers use them. If the physician does not use the appropriate AI-integrated medical device or disregards its diagnosis and/or recommended treatment and a patient is injured as a result, the physician may incur liability. Conversely, if the physician follows the recommendation of the standard-of-care AI-integrated medical device and the recommendation turns out to be wrong, the physician may avoid liability.

The question then arises: is the medical device company liable in the scenario where a physician follows the recommendation of an AI-integrated device that turns out to be wrong and a patient is injured as a result? IDx certainly believes that is a possibility and has purchased liability insurance to cover any patient injuries. As AI develops into the standard of care, society may see a shift in responsibility, at least in part, from the diagnosing or treating physician to the medical device company when an injury occurs (beyond the existing scope of products liability claims).

Unauthorized Use of Protected Healthcare Information and HIPAA Violations From AI

The development of AI depends on researchers gaining access to large sets of health data from thousands of patients. AI developers need massive amounts of data in order to “train” AI software to properly predict diagnoses, prognoses and treatment responses for future patients. As the reliance on AI increases, it is likely that individual patient data will be analyzed in bulk to inform the care of other patients. However, the use of individual patients’ protected health information (PHI) to diagnose another patient’s diseases and inform treatment options raises legal and ethical concerns related to privacy.

In the United States, the Health Insurance Portability and Accountability Act of 1996 (HIPAA) Privacy Rule standards provide certain restrictions as to how a healthcare provider and other “covered entities” may use a patient’s health information. A major goal of the Privacy Rule standards is to ensure that the confidentiality and privacy of health information is properly protected while facilitating the flow of health information for appropriate purposes such as treatment and public health. A healthcare provider, such as a medical practice, can only use and disclose PHI without an individual’s authorization for certain limited purposes. Those purposes include, but are not limited to:

• The disclosure is made for treatment, payment, or healthcare operations;

• The disclosure is related to a judicial proceeding;

• The disclosure is required by law; or,

• The disclosure is related to certain research or public health purposes.

While they may seem straightforward, the Privacy Rule standards and other HIPAA rules are complex and fraught with pitfalls, especially in the ever-changing landscape of AI. If a healthcare provider intends to use AI-integrated medical devices in its practice, it should closely evaluate HIPAA and other applicable state and federal privacy laws. If certain laws are violated, the consequences can be severe. HIPAA violations can result in significant financial penalties, criminal sanctions and civil litigation.

AI Impact on Reduced Physician and Patient Decision-Making

The use of AI is likely to change the liability landscape, which in turn may impact the types of treatments physicians can recommend. As AI migrates toward becoming the standard of care, the diagnosing or treating physician is likely to become incentivized to follow the recommendation of the AI-integrated device, which may in turn detract from the physician’s own independent medical judgment that has traditionally been a key factor in the treatment process.

Similarly, AI is likely to impact a patient’s autonomy in making their own medical decisions. As AI becomes the standard of care, health insurance companies are likely to follow suit and amend the types of procedures and treatments that are “covered” or “medically necessary” to include only those that are AI-recommended. While this example may be extreme, it presents an interesting ethical dilemma that is likely to be debated moving forward: do humans really want to give up the ability to make their own medical decisions and allow computer programs to routinely make decisions that concern their health and well-being?

In any event, AI will continue to grow in the medical field and will alter existing workflows, paradigms and relationships. The radiological field will be at the forefront of this growth and will likely face uncertainty in many regards as the medical and legal communities grapple with how to integrate this new technology.

Editor's note: Joe Fornadel, J.D., focuses his practice in the areas of pharmaceutical and medical device litigation, as well as product risk assessment and regulatory compliance. He may be reached at [email protected].

Wes Moran, J.D., focuses his practice in the areas of products liability and complex commercial litigation. Since joining Nelson Mullins in 2016, he has been engaged in the defense of pharmaceutical and medical device companies in both state and federal court. He may be reached at [email protected].

Established in 1897, Nelson Mullins has more than 800 attorneys and government relations professionals with 25 offices in 11 states and Washington, D.C. For more information on the firm, go to www.nelsonmullins.com.

These materials have been prepared for informational purposes only and are not legal advice.

September 24, 2025
