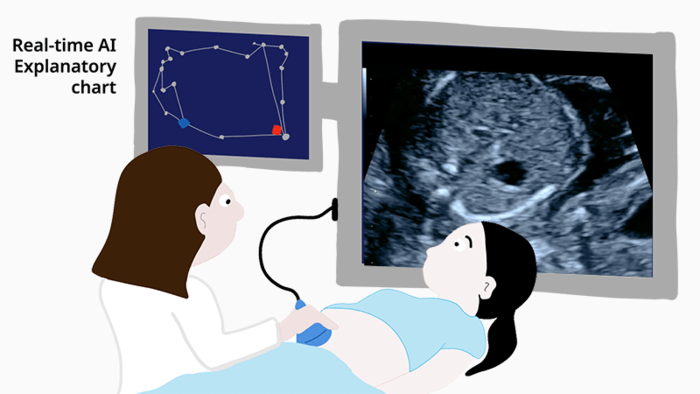

April 20, 2022 – Researchers from the RIKEN Center for Advanced Intelligence Project (AIP) and colleagues have tested AI-enhanced diagnosis of fetal congenital heart disease in a clinical setting. Both hospital residents and fellows made more accurate diagnoses when they used a graphical interface that represented the AI’s analysis of fetal cardiac ultrasound screening videos. The new system could help train doctors as well as assist in diagnoses when specialists are unavailable. The report recently appeared in the scientific journal Biomedicines.

Congenital heart problems account for almost 20% of newborn deaths. Although an early diagnosis before birth is known to improve the chances of survival, it is extremely challenging because diagnoses have to be based entirely on ultrasound videos. In particular, subtle abnormalities can be obscured by movements of the fetus and the probe. Experts can screen the images very well, but in practice, the vast majority of routine ultrasounds are screened only by attending residents or fellows. To address this problem, researchers led by Masaaki Komatsu at RIKEN AIP have been developing AI that can learn what a normal fetal heart looks like after being exposed to thousands of ultrasound images. It can then make diagnoses by classifying ultrasound videos as normal or abnormal.

The system has done well in the laboratory, but making it work in a real-world setting brings an entirely new set of challenges. As Komatsu explains, “It’s difficult to build trust with medical professionals when the decisions made by AI take place in a ‘black box’ and cannot be understood.” The new study tested an improved explanatory AI system that allows doctors to view a graphical chart that represents the AI’s decisions. In addition, the charts themselves are generated through another round of deep learning, which improved AI performance and allowed doctors to see whether the abnormalities were related to the heart, blood vessels, or other features.

Experts, fellows, and residents were given the same sets of ultrasound videos and asked to provide diagnoses twice, once without the explanatory AI and once assisted by the graphical representation of the AI’s decision. The examiners were not given the actual AI decision, which is simply a numerical value. The researchers found that each group of doctors made more correct diagnoses when using the new AI-based decision charts. “This is the first demonstration in which examiners at all levels of experience were able to improve their ability to screen ultrasound videos for fetal cardiac abnormalities using explainable AI,” says Komatsu.

A closer look at the results provided some surprising findings. The less experienced examiners, fellows and residents, became 7% and 13% more accurate, respectively, with the AI’s help. While experts and fellows were able to make good use of the AI, residents were still about 12% less accurate than the AI alone. Thus, in terms of clinical application, the AI was most useful for the fellows, who happen to be the ones who usually carry out fetal cardiac ultrasound screening in the hospital.

“Our study suggests that even with widespread use of AI assistance, an examiner’s expertise will still be a key factor in future medical examinations,” says Komatsu. “In addition to future clinical applications, our findings show maximum benefit from this technology could be achieved by also using it as part of resident training and education.”

For more information: Medical Professional Enhancement Using Explainable Artificial Intelligence in Fetal Cardiac Ultrasound Screening

March 31, 2025
